From HAL 9000 to Westworld’s Dolores: the pop culture robots that influenced smart voice assistants

As at January 2019, nearly a third of Australians owned a smart speaker such as an Amazon Echo or Apple HomePod, devices that let users call on ‘Alexa’ or ‘Siri’, respectively. With people remaining homebound due to the COVID-19 pandemic, smart voice assistants may now be playing even bigger roles in people’s lives. Yet not everyone embraces them, with some finding them intrusive and surveillant.

In a paper recently published in New Media & Society, Dr Justine Humphry and Dr Chris Chesher from the Department of Media and Communications trace anxiety about smart voice assistants to a long history of threatening robot voices and narratives in Hollywood.

‘Menacing males’ and ‘monstrous mothers’

Despite smart voice assistants’ popularity, largely due to their default naturalistic female voices and helpful personalities, their uncanniness – seeming to be something between human and robotic – deters many people.

“This is underscored by the progression of robot voices in popular culture from the early 20th century onwards,” said Dr Humphry, a Lecturer in Digital Cultures. “They went from representing ‘marvels of futuristic technology’ in the early 20th century, to sounding darker and more sinister from around the 1950s onwards. With distinctive sounds that gave the robots a sense of ‘otherness’, they became associated with narratives of science gone out of control.”

Examples of such robots include those in the films Forbidden Planet (1956) and The Colossus of New York (1958), and the infamous computer HAL 9000 in Stanley Kubrick’s 2001: A Space Odyssey (1968), whose allegiance to the mission at the cost of the crew becomes murderous.

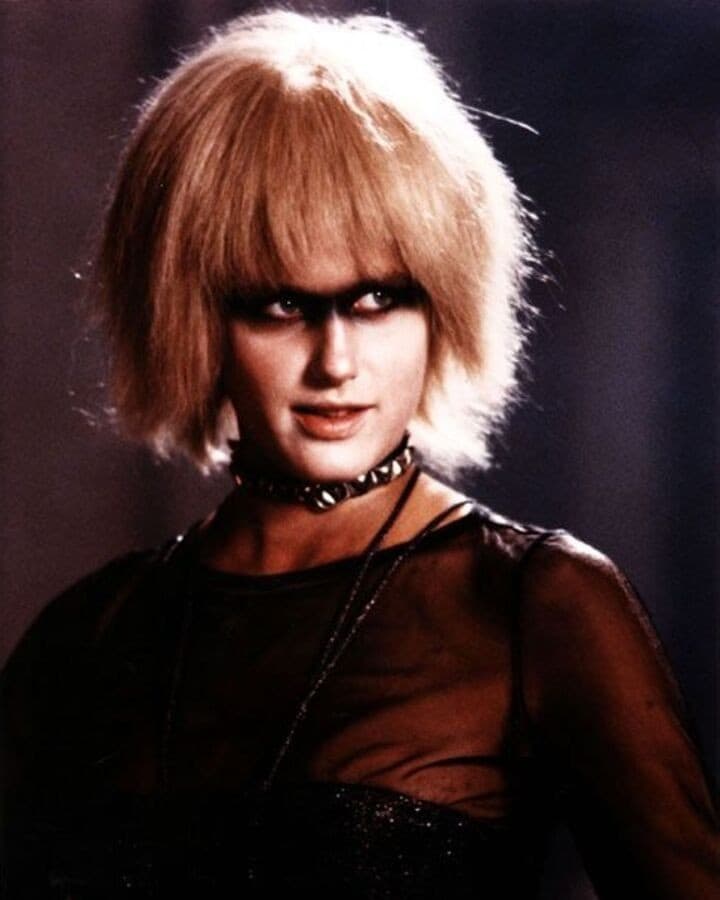

'Pris', a 'pleasure model' replicant in the film Blade Runner. Credit: Warner Bros.

It wasn’t long, however, before popular culture robots morphed again; this time, into humanlike robots, like those in the film Blade Runner (1982), who, in speech, more closely resemble a Siri, Alexa, Cortana (Microsoft) or Google Assistant (Google Home). “The replicants in Blade Runner are very hard to distinguish from humans,” Dr Chesher, Senior Lecturer in Digital Cultures, said.

Today, this kind of robot predominates on the small and big screen. “Robots have increasingly tended to become more psychologically complex characters, and this has been accompanied by variations in modality in voice and speech,” Dr Chesher continued. “In the 2016 TV series Westworld, for example, as the robots Maeve and Dolores achieve more sentience, their behaviour becomes more naturalistic, and their voices become more inflected, cynical and self-aware.”

This can also be seen in the 2015 British TV series Humans, where two groups of anthropomorphic robots, called ‘synths’, are distinguished by one group’s ability to more closely resemble humans. Their speech, for instance, has features of natural conversation such as more animation and meaningful pauses.

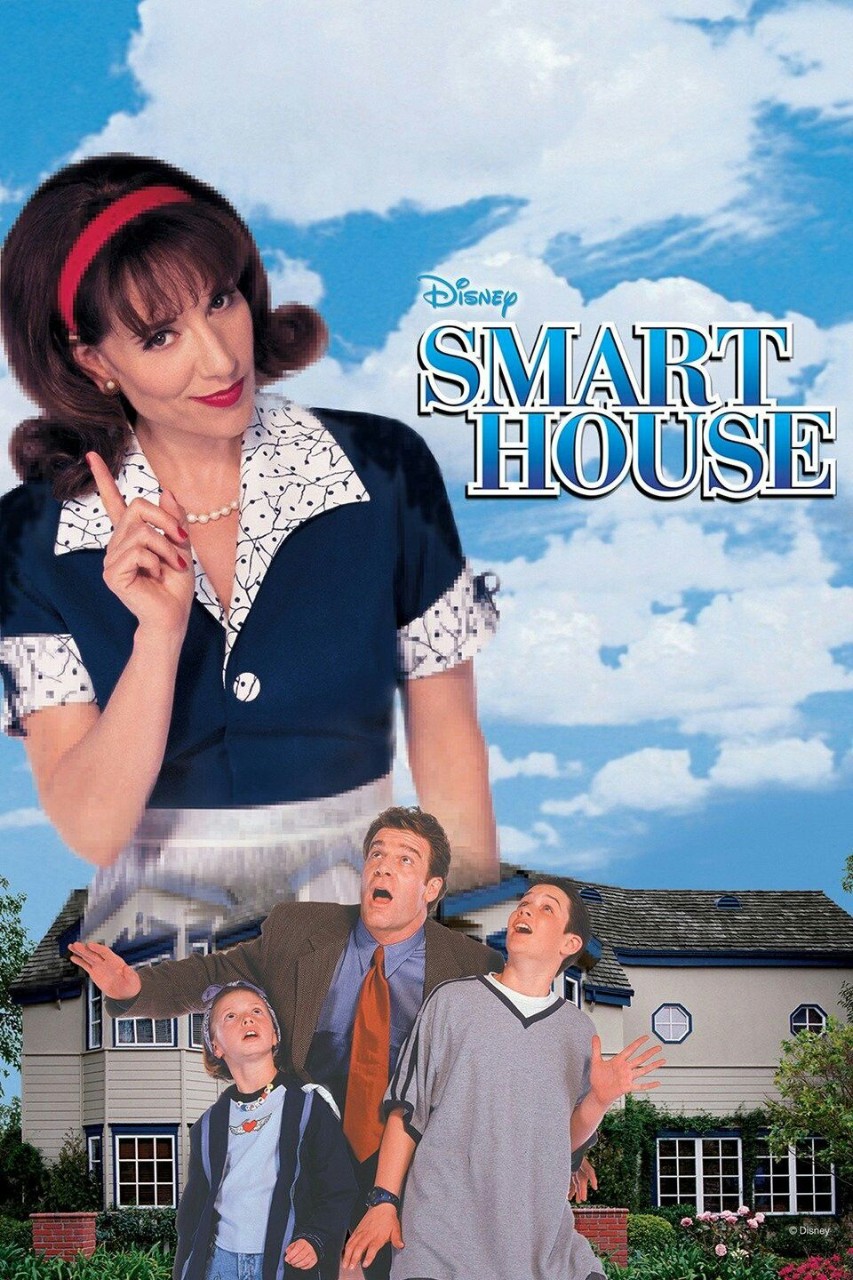

Amidst these ‘menacing males’ and humanlike robots emerged ‘monstrous mother’ archetypes – maternal figures with misplaced instincts. In the Disney movie Smart House (1999), the smart home, personified as the voice of PAT, turns into a controlling mother who flies into a rage when the family refuses to cede to her maternal demands. In I, Robot (2004), the computer VIKI and her robot hordes turn against people in a maternal effort to protect humanity from itself.

A promotional poster for the film 'Smart House'. Credit: Disney.

How robots informed the voices of smart assistants

“In all the above examples, the voice is a crucial vehicle with which robots express a persona,” Dr Chesher said. “Smart voice assistant developers adopted this concept after recognising its value in getting consumers to identify with their products.”

Big tech companies’ decision to use the voices of female voice actors specifically was strategic, explained Dr Humphry: “Not only does this leverage the notion of females as naturally sympathetic and helpful; it is the antithesis of the ‘menacing male’ or ‘monstrous mother’ cinematic robot archetypes, with their heavily synthesised voices. This could steer would-be consumers away from thinking of them as dangerous surveillant machines.”

The smart assistants are also programmed to be culturally competent in their relevant market. For example, the Australian version of Google Assistant knows about pavlova and galahs and uses Australian slang expressions. Gentle humour, too, plays a significant role in humanising the artificial intelligence behind these devices. “When asked ‘Alexa, are you dangerous?’, she replies in a calm retort, ‘No, I am not dangerous’,” Dr Humphry said.

With these features combined, smart assistants resemble the humanoid robots in latter-day pop culture, which are sometimes near indistinguishable from humans themselves.

Dangerous intimacy

Drs Humphry and Chesher argue that when smart assistants are positioned as innocuous through their voice characteristics, consumers can be lulled into a false sense of security. “With voices that are apparently natural, transparent and depoliticised, the assistants give only one brief answer to each question and draw these responses from a small range of sources. This gives the tech companies that own them significant soft power, in their potential to influence consumers’ feelings, thoughts and behaviour,” they write.

Of concern to them, too, is the future of smart voice assistants: “Google’s experimental technology Duplex allows users to ask the assistant to make phone calls on their behalf to perform tasks such as booking a hair appointment. In this case, the assistant starts a naturalistic conversation with an involuntary user. The test of its success here is the extent to which it/she can pass as ‘human’. These developments further risk manipulating consumers and obscure the implications of surveillance, soft power and global monopoly.”

Hero image: Dolores Abernathy, a robot character who becomes self-aware in the TV series Westworld. Credit: HBO.